Picture a busy restaurant kitchen on a Saturday night. Orders are flying in. Instead of yelling every single order at the chefs and overwhelming them, a simple ticket rail holds the incoming chits. The chefs can grab the next ticket whenever they're ready, working at a steady, manageable pace.

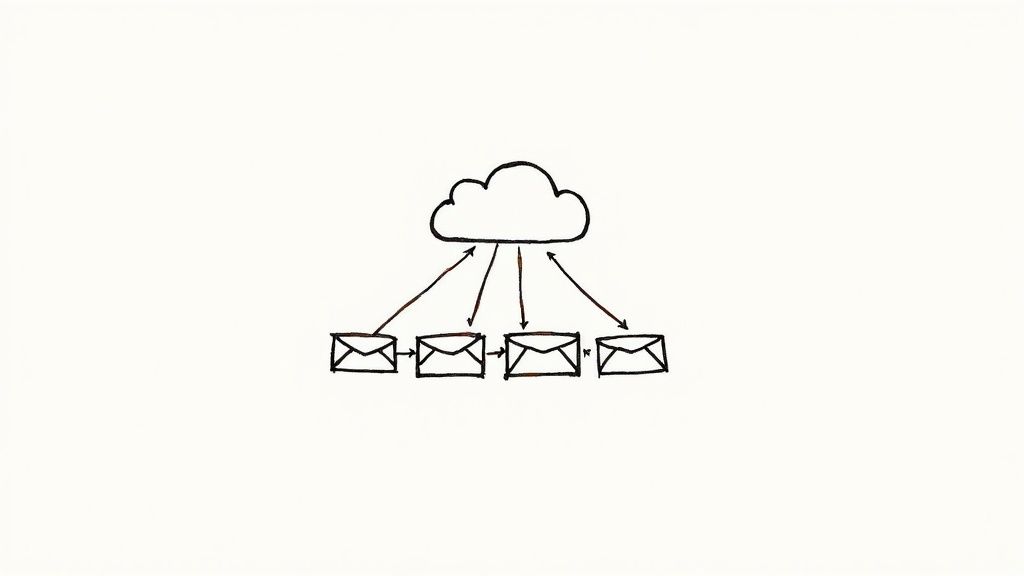

Azure Storage Queue is that ticket rail for your cloud application. It’s a beautifully simple service designed to hold a massive number of messages, allowing different parts of your system to process that work asynchronously, right when they have the capacity.

Understanding the Purpose of an Azure Storage Queue

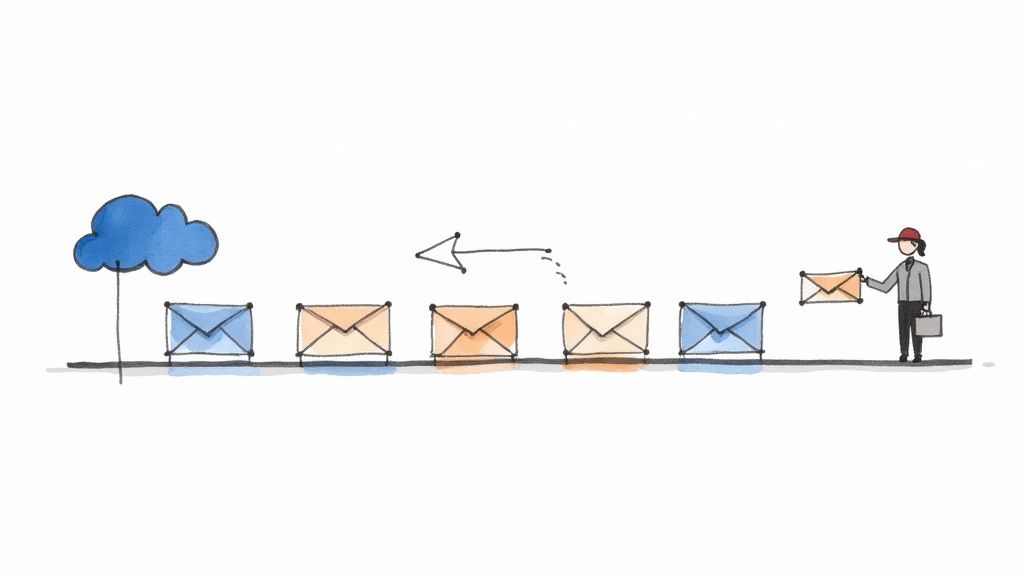

At its heart, an Azure Storage Queue solves a classic problem in building distributed systems: decoupling your application's components.

Think about it. When one part of your app (let's call it the "producer") needs to hand off work to another part (the "consumer"), a direct, real-time connection creates a fragile dependency. If the consumer slows down or fails outright, the producer grinds to a halt right along with it. The whole system becomes brittle.

A queue elegantly sidesteps this by acting as a reliable buffer between them. The producer can just drop a message onto the queue and immediately move on, trusting that the work order is safely stored. Meanwhile, the consumer can pull messages off the queue and process them at its own pace, scaling up or down independently to handle the ebbs and flows of the workload. This simple but incredibly powerful pattern is a cornerstone of building resilient, high-performance cloud applications.

Azure Storage Queue at a Glance

To get a quick handle on where this service fits, here’s a look at its core characteristics. This table breaks down what you need to know to decide if it's the right tool for your job.

| Attribute | Description |
|---|---|
| Primary Use Case | Asynchronous task processing and decoupling system components. |
| Message Size Limit | Up to 64 KiB per message, perfect for lightweight tasks and instructions. |
| Queue Capacity | A single queue can hold up to 500 TiB of data, accommodating millions of messages. |
| Access Protocol | Simple and universal access via standard HTTP/HTTPS requests. |
| Ordering | Provides best-effort ordering but doesn't guarantee strict First-In, First-Out (FIFO). |
| Durability | Messages are reliably stored within an Azure Storage Account. |

This isn't just some niche tool; it's a foundational service that props up a huge range of applications. The incredible growth of Microsoft Azure really underscores how vital services like this are. By mid-2025, Azure had captured nearly 25% of the cloud market, with thousands of companies in software, education, and marketing relying on its infrastructure. If you're curious about the numbers, you can dig into some great Azure market share insights on turbo360.com.

Key Takeaway: Reach for an Azure Storage Queue when you need a simple, massive-scale, and seriously cost-effective buffer. It's ideal for managing background jobs, offloading long-running tasks, or creating a dependable communication channel between microservices without the overhead of a full-blown message broker.

Understanding the Core Architecture and Message Lifecycle

To really get the hang of Azure Storage Queue, it helps to peek under the hood. Its power lies in a simple, yet incredibly robust, architecture built for massive scale. The best way to think about it is like a physical warehouse system for your application's tasks.

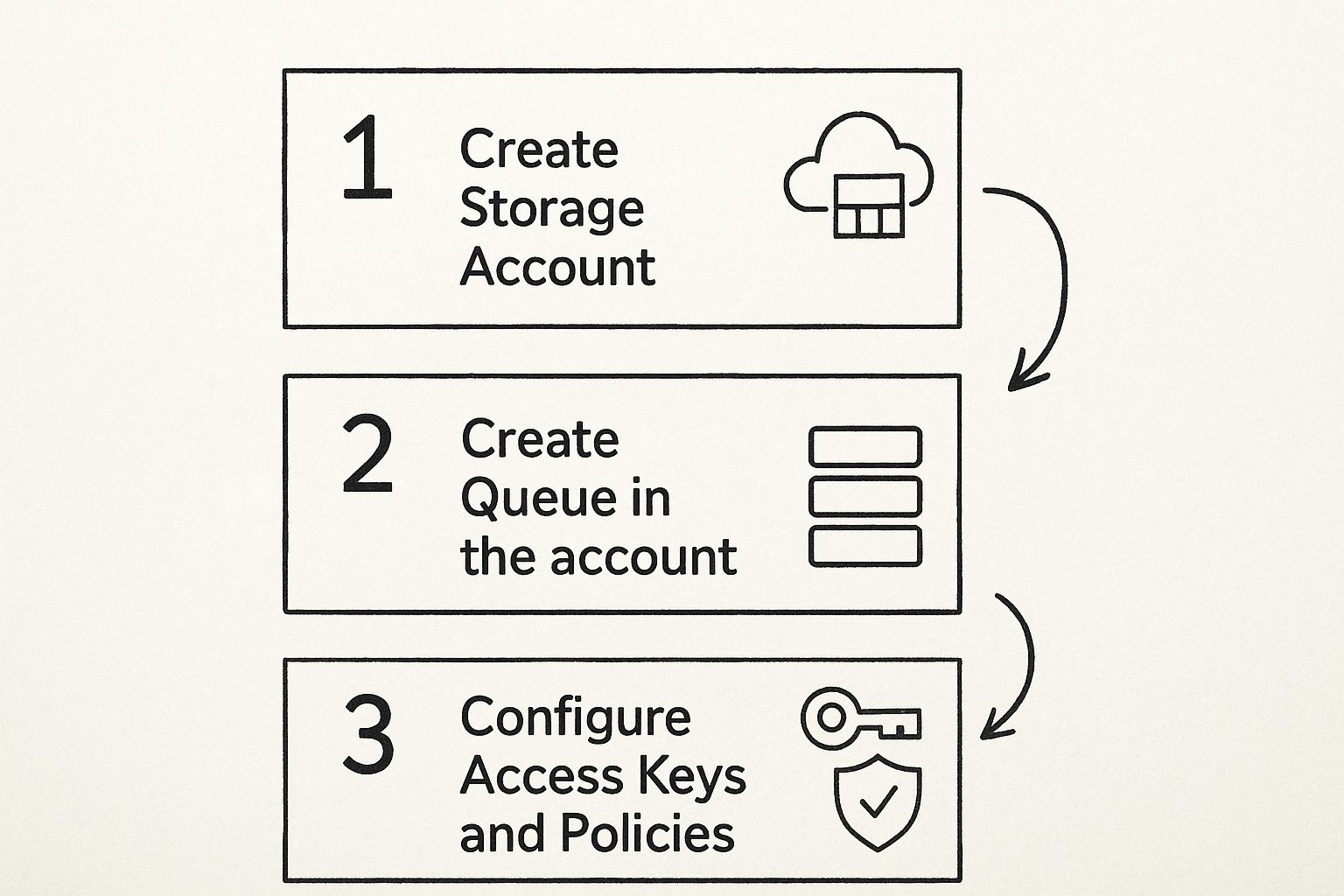

First, you have the Storage Account. This is the entire warehouse building, the main container in Azure that holds all your data services, including queues, blobs, and tables. Every single queue you create has to live inside a Storage Account.

Inside that warehouse, you have dedicated aisles for different products. In this analogy, a Queue is one of those aisles—a named list where you line up your tasks. You can have tons of queues within one storage account, each one handling a different job for your application.

Finally, you have the Messages. These are the individual boxes stacked in the aisle, each holding a small payload of information—up to 64 KiB in size. A message represents a single unit of work, like a request to generate a report or send a confirmation email.

The Journey of a Message

Every message goes on a specific journey to make sure work gets done reliably, even if a worker fails partway through. This lifecycle has a few key steps:

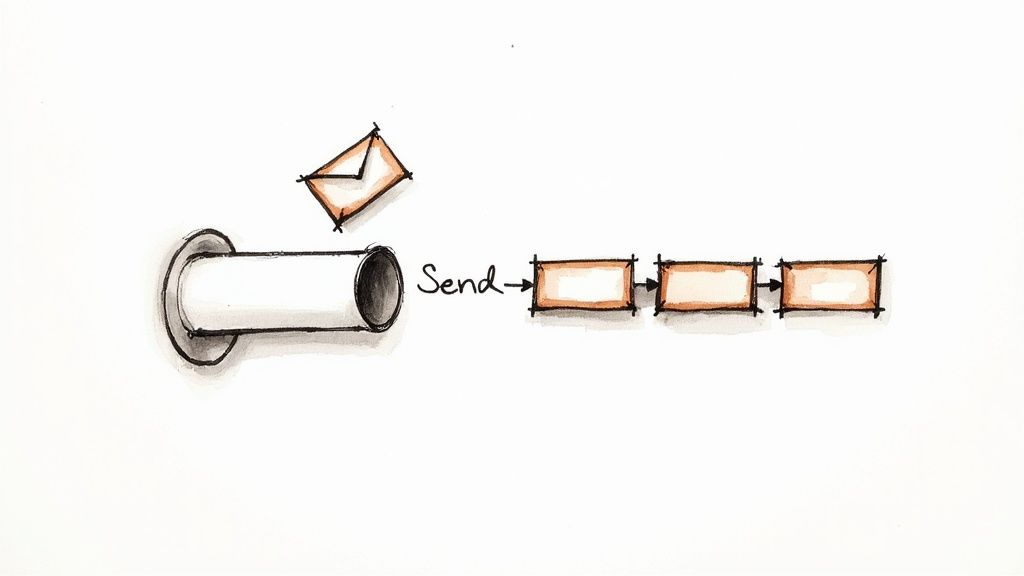

- Enqueue: A "producer" application adds a message to the back of the queue. At this point, the message is safely stored and just waiting for a worker to pick it up.

- Dequeue: A "consumer" (or worker role) asks for a message from the front of the queue. The message isn't deleted at this point; it's only hidden from other consumers for a while.

- Process: The consumer gets to work, performing the task described in the message's content.

- Delete: Once the job is finished successfully, the consumer explicitly deletes the message from the queue for good.

This flow is the foundation for using Azure Storage Queues effectively. Before you can even send your first message, you have to get the basic structure in place.

Everything starts with that top-level Storage Account, which provides the security boundary and endpoint for your queue to operate.

The Role of Visibility Timeout

So, what happens if a worker grabs a message and then crashes midway through its task? This is a classic problem in distributed systems. To prevent that message from being lost in limbo, Azure Storage Queue uses a clever feature called the visibility timeout.

When a consumer dequeues a message, it isn't actually removed from the queue. Instead, it’s just made invisible to all other consumers for a set period of time—the visibility timeout.

If the worker finishes its job within that timeout window, it deletes the message, and all is well. But if the worker crashes or the process fails, the timeout simply expires. The message automatically becomes visible again on the queue, ready for another worker to pick it up and try again.
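Here is a minimal sketch of what the visibility timeout looks like from a worker's side, using the Azure.Storage.Queues package. The connection string placeholder, the queue name, and the 30-second and 2-minute values are assumptions chosen for illustration, not recommendations.

```csharp
using System;
using Azure.Storage.Queues;
using Azure.Storage.Queues.Models;

// Placeholder values: substitute your own connection string and queue name.
var queueClient = new QueueClient("YOUR_STORAGE_ACCOUNT_CONNECTION_STRING", "image-processing-jobs");

// Hide the message from other consumers for 30 seconds while we work on it.
QueueMessage message = (await queueClient.ReceiveMessageAsync(
    visibilityTimeout: TimeSpan.FromSeconds(30))).Value;

if (message != null)
{
    // If the job turns out to need longer, extend the timeout before it expires.
    // The update returns a NEW pop receipt; the old one is no longer valid.
    UpdateReceipt receipt = (await queueClient.UpdateMessageAsync(
        message.MessageId,
        message.PopReceipt,
        message.MessageText,
        visibilityTimeout: TimeSpan.FromMinutes(2))).Value;

    // ... do the actual work here ...

    // Delete with the latest pop receipt so the message is not redelivered.
    await queueClient.DeleteMessageAsync(message.MessageId, receipt.PopReceipt);
}
```

If the worker crashes anywhere before the final delete, nothing else is needed: the timeout runs out and the message becomes visible again.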

This "peek-lock" pattern is what makes the service so resilient. It's perfect for background jobs running in services like WebJobs; you can learn more about Azure App Service in our detailed guide. By understanding this simple mechanism, you can build robust applications that handle failures gracefully, so that a crashed worker doesn't mean a dropped task.

Choosing Between Storage Queues and Service Bus Queues

When you're building an application in Azure and need to pass messages between different parts of your system, you'll quickly run into a fork in the road. On one side, you have Azure Storage Queues, and on the other, Azure Service Bus Queues. This isn't just a minor technical detail—it's a fundamental architectural decision that will shape your application's reliability, complexity, and cost.

Making the right call here means picking the tool that solves your problem perfectly, without saddling you with unnecessary complexity or a bigger bill than you need.

Azure Storage Queue vs Service Bus Queues

To make sense of the choice, it helps to use an analogy. Think of a Storage Queue as a simple, incredibly efficient conveyor belt. Its job is to move a massive number of small items from one place to another. It doesn't really care about the exact order they arrive in, just that they get there reliably to be processed. It's built for simplicity and huge scale, communicating over standard HTTP/HTTPS.

In contrast, Service Bus is more like a sophisticated, fully automated sorting facility at a major logistics hub. It’s packed with advanced features for handling complex workflows, guaranteeing that items are delivered in a specific order, managing transactions, and even automatically rerouting problematic packages to a special handling area.

To really nail down the differences, here’s a side-by-side look at what each service brings to the table.

| Feature | Azure Storage Queue | Azure Service Bus Queues |
|---|---|---|
| Message Ordering | Best-effort (no guarantee) | Guaranteed First-In, First-Out (FIFO) when using message sessions |
| Duplicate Detection | No built-in mechanism | Yes, configurable detection window |
| Dead-Lettering | Manual setup required ("poison queue") | Automatic dead-lettering for failed messages |
| Message Size | Up to 64 KiB | Up to 256 KB (Standard tier) or 100 MB (Premium tier) |
| Transaction Support | No | Yes, supports atomic operations |
| Communication | HTTP/HTTPS | Advanced Message Queuing Protocol (AMQP) |
| Best For | Simple, high-volume background tasks | Complex workflows, transactional systems, and pub/sub scenarios |

This table lays it all out, but let's talk about what these features mean in the real world.

When Simplicity and Scale Are What You Need

You should reach for a Storage Queue when your needs are straightforward. If you just need to offload background tasks—like processing image thumbnails after an upload or firing off email notifications—Storage Queues are your best bet.

Imagine users are uploading thousands of images to your app. Each upload needs to kick off a task to resize the image into a few different formats. In this case, the order of processing doesn't matter, and each resizing job is completely independent. This is a textbook use case for a Storage Queue.

Here's why it works so well:

- Massive Throughput: A single queue can handle up to 2,000 messages per second (at roughly 1 KiB each) and can grow to a staggering 500 TiB of data.

- Cost-Effectiveness: You primarily pay for storage and the number of operations, which becomes extremely cheap when you're dealing with high volumes.

- Architectural Simplicity: It's a lightweight, easy-to-implement way to decouple your application's components without the heavy lifting of a full message broker.

If your project is all about high-volume, non-critical background work, the simplicity and low cost of a Storage Queue are tough to beat.

When You Need Enterprise-Grade Features

On the flip side, if your application involves complex business logic or financial transactions, the advanced capabilities of Azure Service Bus become non-negotiable. It's a true enterprise message broker, offering features that Storage Queues just don't have.

Critical Distinction: Service Bus can guarantee First-In, First-Out (FIFO) message ordering through message sessions. If the sequence of operations is vital, as with the steps in a user registration workflow or an e-commerce order, Service Bus is your only real choice.

Service Bus also provides features like automatic dead-lettering for failed messages and transaction support, which are must-haves for building robust, enterprise-grade systems. To get the full picture, you can explore our comprehensive guide on Azure Service Bus.

Ultimately, the choice boils down to this: start by asking yourself if you need strict ordering, transactions, or duplicate detection. If the answer is yes to any of those, your path leads directly to Service Bus. If not, the simplicity, scale, and cost-efficiency of an Azure Storage Queue make it the clear winner.

Unpacking Key Features and Scale Limits

When you start working with Azure Storage Queue, it's easy to think of it as just a simple list for messages. But that's just scratching the surface. It’s actually a highly-engineered service built for massive scale, and to get the most out of it, you need to understand both its powerful features and its performance boundaries.

Think of these limits not as constraints, but as guardrails. They help you design resilient systems that can handle huge workloads without stumbling. The sheer capacity for both message volume and throughput is one of its most impressive traits. It’s designed from the ground up to process millions of messages asynchronously, making it a perfect foundation for scalable background job processing. This lets your front-end applications stay snappy and responsive while worker roles plow through tasks in the background.

This scalability isn't just a vague promise; it's backed by very specific performance targets. For instance, a single queue can grow to a massive 500 tebibytes (TiB). That’s more than enough space for millions upon millions of messages. Each message can be up to 64 kibibytes (KiB), and an entire storage account can handle up to 20,000 one-kilobyte messages per second. For a deep dive into all the metrics, it's worth checking out the official Azure scalability targets.
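To make the 64 KiB ceiling concrete, here is a small sketch of a pre-flight size check you could run before sending. The QueueLimits helper is hypothetical (it is not part of the Azure SDK), and the Base64 branch assumes the client is configured to Base64-encode message bodies, which inflates the payload by roughly a third.

```csharp
using System;
using System.Text;

Console.WriteLine(QueueLimits.FitsInMessage("{ \"imageId\": \"img-12345\" }"));        // small JSON fits
Console.WriteLine(QueueLimits.FitsInMessage(new string('a', 70_000)));                 // too large as plain text
Console.WriteLine(QueueLimits.FitsInMessage(new string('a', 50_000), base64: true));   // too large once encoded

// Hypothetical helper, not part of the Azure SDK.
public static class QueueLimits
{
    // 64 KiB, the per-message limit quoted above.
    public const int MaxMessageBytes = 64 * 1024;

    // Base64 emits 4 output bytes for every 3 input bytes, rounded up.
    public static int Base64Length(int rawBytes) => 4 * ((rawBytes + 2) / 3);

    public static bool FitsInMessage(string text, bool base64 = false)
    {
        int rawBytes = Encoding.UTF8.GetByteCount(text);
        int wireBytes = base64 ? Base64Length(rawBytes) : rawBytes;
        return wireBytes <= MaxMessageBytes;
    }
}
```

With Base64 in play, the practical ceiling for the raw payload works out to 48 KiB, which is one more reason to keep messages small.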

Securing Your Messaging Infrastructure

Scale is great, but it’s worthless without strong security. An unprotected messaging layer can leak sensitive data and open up major holes in your application. Thankfully, Azure Storage Queue comes with multiple security layers to protect your messages both in transit and at rest.

You get fine-grained control over who can touch your queues and what they're allowed to do. Here are the main ways to lock things down:

- Microsoft Entra ID (formerly Azure Active Directory, or Azure AD) Integration: This is the gold standard for modern apps. Using it lets you assign permissions to users, groups, or service principals through Azure's role-based access control (RBAC). This is a huge win because you no longer have to pass around shared keys, and you get much better security and auditing.

- Shared Access Signatures (SAS): A SAS token is a special URL that grants limited, temporary access to your storage resources. You can define exactly what someone can do (read, add, update, process), which queue they can access, and for how long the token is valid. It's ideal for giving clients limited access without handing over the keys to the kingdom.

- Storage Account Access Keys: These keys give you full, unrestricted access to your storage account. Treat them like a root password. They should only be used by trusted, server-side applications that genuinely need that level of control.

Pro Tip: Whenever you have a choice, go with Azure AD integration for authentication. It centralizes access management and gets rid of the headache and risk of managing and rotating storage account keys or SAS tokens.
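As a rough sketch of what keyless authentication can look like in .NET, the snippet below connects with DefaultAzureCredential from the Azure.Identity package instead of a connection string. The account name, queue name, and the QueueEndpoints helper are hypothetical, and the sketch assumes the identity running the code has already been granted a suitable queue data role (for example, Storage Queue Data Contributor).

```csharp
using System;
using Azure.Identity;
using Azure.Storage.Queues;

// Hypothetical account and queue names for illustration.
Uri queueUri = QueueEndpoints.ForQueue("mystorageaccount", "image-processing-jobs");

// DefaultAzureCredential tries several sources in turn (environment variables,
// managed identity, developer tool logins), so no key appears in code or config.
var queueClient = new QueueClient(queueUri, new DefaultAzureCredential());

await queueClient.SendMessageAsync("hello from a keyless client");

// Hypothetical helper, not part of the Azure SDK.
public static class QueueEndpoints
{
    // Builds the public-cloud queue endpoint: https://<account>.queue.core.windows.net/<queue>
    public static Uri ForQueue(string accountName, string queueName) =>
        new Uri($"https://{accountName}.queue.core.windows.net/{queueName}");
}
```

The same code can run unchanged on a developer machine and in Azure, because the credential picks whichever identity source is available.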

By understanding these performance limits and using the built-in security features, you can build systems that are not only massively scalable but also secure from the start. Knowing the boundaries—like the 2,000 messages per second target for a single queue—helps you architect solutions that can grow with your needs, avoid throttling, and keep your application dependable under pressure. This knowledge turns the Azure Storage Queue from a simple tool into a strategic part of any powerful, decoupled enterprise application.

Implementing Common Operations with Code Examples

Theory is great, but let's be honest—getting your hands dirty with code is where the real learning happens. This section is all about rolling up our sleeves and working directly with Azure Storage Queue. We'll walk through practical, real-world code examples using the modern .NET SDK to handle the day-to-day operations you'll actually need.

We're going to cover the entire lifecycle of a message. We'll start by creating a queue, then add some work to it, process that work, and finally clean up. Think of this as your go-to playbook for talking to queues programmatically. Every snippet is designed to be clear and straightforward.

Setting Up the Queue Client

Before you can do anything, you need a way to connect to your queue. That’s where the QueueClient comes in. This object is your gateway to Azure Storage Queue. It's lightweight and designed to be reused throughout your application, which is a key best practice for performance.

To get started, you just need two things:

- Your Azure Storage Account's connection string.

- The name of the queue you want to work with.

Here’s how you can initialize the client. For our examples, we'll pretend we have a queue named "image-processing-jobs".

    // At the top of your file
    using Azure.Storage.Queues;

    // Your connection string and queue name
    string connectionString = "YOUR_STORAGE_ACCOUNT_CONNECTION_STRING";
    string queueName = "image-processing-jobs";

    // Create a QueueClient which will be used to interact with the queue
    QueueClient queueClient = new QueueClient(connectionString, queueName);

    // Ensure the queue exists before we start using it
    await queueClient.CreateIfNotExistsAsync();

That CreateIfNotExistsAsync() method is a lifesaver. It’s a simple, idempotent call that checks if the queue is ready for action. If it's already there, nothing happens. If not, it creates it for you. This tiny step prevents a lot of headaches and runtime errors down the road.

Adding and Retrieving Messages

With our client ready, let's get to the core of it: adding (enqueuing) and retrieving (dequeuing) messages. It’s a lot like a busy kitchen—one person puts an order ticket on the rail, and a chef grabs it to start cooking.

Enqueuing a Message

To add a message to the queue, you just call SendMessageAsync(). The message itself is a string, which is perfect for serialized data like JSON that describes the task at hand.

    // Example: A message asking a worker to resize an image
    // (inner quotes are escaped so the JSON is a valid C# string literal)
    string messageText = "{ \"imageId\": \"img-12345\", \"targetSize\": \"500x500\" }";

    // Send the message to the Azure Storage Queue
    await queueClient.SendMessageAsync(messageText);
    Console.WriteLine($"Sent a message: {messageText}");

This operation is blazing fast. It lets your producer application offload the work and immediately move on to its next task.

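Important Insight: A message body has to be valid in an XML request with UTF-8 encoding. Older versions of the .NET SDK Base64-encoded every message by default, but the current Azure.Storage.Queues client sends text as-is unless you set QueueClientOptions.MessageEncoding to QueueMessageEncoding.Base64. Turn that option on when you need to send arbitrary binary data or when the consumer expects Base64; the SDK then handles the encoding and decoding for you behind the scenes, so you can keep working with plain text.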

Peeking at Messages

Sometimes, you need to see what's at the front of the line without actually taking the ticket. The PeekMessageAsync() method lets you do just that. It's a non-destructive way to inspect the next message.

    // Peek at the next message without removing it from the queue
    var peekedMessage = await queueClient.PeekMessageAsync();

    // Value is null when the queue is empty, hence the null-conditional access
    Console.WriteLine($"Peeked message content: {peekedMessage.Value?.Body}");

This is incredibly useful for debugging or for building monitoring tools that need to check the queue's health without interfering with the actual workers.
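For the monitoring case, a sketch along these lines may be a useful starting point. It reads the approximate queue length and peeks at a handful of messages; the connection string placeholder, queue name, and the batch size of 5 are assumptions for illustration.

```csharp
using System;
using Azure.Storage.Queues;
using Azure.Storage.Queues.Models;

var queueClient = new QueueClient("YOUR_STORAGE_ACCOUNT_CONNECTION_STRING", "image-processing-jobs");

// The count is approximate by design; treat it as a trend indicator, not an exact figure.
QueueProperties properties = (await queueClient.GetPropertiesAsync()).Value;
Console.WriteLine($"Approximate messages waiting: {properties.ApproximateMessagesCount}");

// Peek at up to 5 messages without hiding or dequeuing them.
PeekedMessage[] peeked = (await queueClient.PeekMessagesAsync(maxMessages: 5)).Value;
foreach (PeekedMessage message in peeked)
{
    Console.WriteLine($"{message.MessageId} (dequeued {message.DequeueCount} times): {message.Body}");
}
```

A steadily climbing count is usually the first sign that consumers are not keeping up with producers.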

Processing and Deleting Messages

Now for the main event: the worker's job. A consumer application's workflow is a simple, robust loop.

- Receive a Message: You use ReceiveMessageAsync() to pull a message from the queue. This action makes the message invisible to other consumers for a set period (the visibility timeout).

- Process the Work: This is where your business logic kicks in: resizing an image, sending an email, whatever the task requires.

- Delete the Message: Once the job is done, you call DeleteMessageAsync() using the message's unique MessageId and PopReceipt. This permanently removes it from the queue, marking the work as complete.

Here’s what that entire "peek-lock-delete" pattern looks like in code:

    // Ask the queue for a message
    var receivedMessage = await queueClient.ReceiveMessageAsync();

    if (receivedMessage.Value != null)
    {
        Console.WriteLine($"Processing message: {receivedMessage.Value.Body}");

        // Simulate doing some work...
        await Task.Delay(2000);

        // Delete the message from the queue after successful processing
        await queueClient.DeleteMessageAsync(receivedMessage.Value.MessageId, receivedMessage.Value.PopReceipt);
        Console.WriteLine("Message processed and deleted.");
    }
    else
    {
        Console.WriteLine("No messages found in the queue.");
    }

This pattern is the foundation of any resilient worker process. If your app crashes after receiving the message but before deleting it, no problem. The visibility timeout will eventually expire, and the message will reappear in the queue for another worker to safely pick up.

By the way, if you prefer managing Azure resources with scripts, you might find our guide on the Azure PowerShell module helpful for automating these kinds of cloud tasks.

Best Practices for Building Resilient and Performant Queues

Moving beyond a simple proof-of-concept to a truly production-ready solution means thinking strategically. It's one thing to drop a message onto an Azure Storage Queue; it's another thing entirely to build a system that can handle real-world stress and recover from the inevitable hiccup. These battle-tested practices are what separate a fragile application from a resilient one.

One of the first things you'll learn in the trenches is the importance of a solid retry strategy. In any cloud environment, temporary network blips and transient service issues are just part of the game. Instead of letting one failed attempt bring down your whole workflow, your worker application needs to try again. The best way to do this is with an exponential backoff algorithm—wait a short time after the first failure, a bit longer after the second, and so on. This simple technique prevents your app from hammering a service that might just need a moment to recover.
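The backoff idea can be sketched in a few lines of plain C#. The Retry helper below is hypothetical (it is not part of the Azure SDK, whose clients also ship with their own configurable retry policy), and catching every exception is a simplification: a real worker would retry only errors it knows to be transient.

```csharp
using System;
using System.Threading.Tasks;

// Usage: retry a flaky operation up to 5 times.
int calls = 0;
string result = await Retry.WithBackoffAsync(async () =>
{
    calls++;
    await Task.Yield();
    if (calls < 3) throw new TimeoutException("simulated transient failure");
    return "done";
}, maxAttempts: 5, baseDelay: TimeSpan.FromMilliseconds(10), maxDelay: TimeSpan.FromSeconds(1));

Console.WriteLine($"{result} after {calls} calls"); // prints "done after 3 calls"

// Hypothetical helper, not part of the Azure SDK.
public static class Retry
{
    // Delay doubles with each attempt (base, 2x, 4x, ...) and is capped at maxDelay.
    public static TimeSpan BackoffDelay(int attempt, TimeSpan baseDelay, TimeSpan maxDelay)
    {
        double ms = baseDelay.TotalMilliseconds * Math.Pow(2, attempt);
        return TimeSpan.FromMilliseconds(Math.Min(ms, maxDelay.TotalMilliseconds));
    }

    public static async Task<T> WithBackoffAsync<T>(
        Func<Task<T>> operation, int maxAttempts, TimeSpan baseDelay, TimeSpan maxDelay)
    {
        for (int attempt = 0; ; attempt++)
        {
            try
            {
                return await operation();
            }
            catch (Exception) when (attempt < maxAttempts - 1)
            {
                // Add up to 20% random jitter so many workers don't retry in lockstep.
                TimeSpan delay = BackoffDelay(attempt, baseDelay, maxDelay);
                double jitter = 1 + Random.Shared.NextDouble() * 0.2;
                await Task.Delay(delay * jitter);
            }
        }
    }
}
```

The cap matters as much as the doubling: without it, a long outage would push the wait between attempts into hours.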

Design for Resilience and Efficiency

Beyond simple retries, how you design your messages and processing logic is what truly builds a fault-tolerant system. Two principles are absolutely fundamental here: idempotency and message size.

- Design Idempotent Messages: An operation is idempotent if you can run it ten times and get the same result as running it just once. Since a message might get processed more than once during a retry, this is a non-negotiable. For instance, if a worker's job is to update a user's status, it should always check the current status first before making a change. This prevents all sorts of messy, unintended side effects.

- Keep Messages Small: Remember that every message has a strict 64 KiB limit. This isn't just a constraint; it's a design guideline. It pushes you to send small, focused commands instead of bulky data blobs. If you need to process a large file, the right move is to upload it to Azure Blob Storage first, then just pop the file's URL into the queue message. This keeps your queue zippy and your operations lean.

Key Takeaway: You have to build your system with the assumption that things will fail. By making your message handlers idempotent, you remove the risk and uncertainty from retries, leading to a far more stable and predictable application.
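To illustrate the "check the current status first" idea, here is a small self-contained sketch. Everything in it is hypothetical: the in-memory UserStatusStore stands in for a real database, and ActivateUserHandler stands in for whatever your worker does with a message.

```csharp
using System;
using System.Collections.Generic;

var store = new UserStatusStore();
var handler = new ActivateUserHandler(store);

// The same message delivered twice only changes state once.
Console.WriteLine(handler.Handle("user-42")); // True: status changed
Console.WriteLine(handler.Handle("user-42")); // False: already active, nothing to do

// Hypothetical in-memory stand-in for a database table.
public class UserStatusStore
{
    private readonly Dictionary<string, string> _status = new();
    public string Get(string userId) => _status.TryGetValue(userId, out var s) ? s : "pending";
    public void Set(string userId, string status) => _status[userId] = status;
}

public class ActivateUserHandler
{
    private readonly UserStatusStore _store;
    public int WelcomeEmailsSent { get; private set; }

    public ActivateUserHandler(UserStatusStore store) => _store = store;

    // Returns true if this call did the work, false if it was a harmless repeat.
    public bool Handle(string userId)
    {
        // Check current state first: a redelivered message finds the work already done.
        if (_store.Get(userId) == "active") return false;

        _store.Set(userId, "active");
        WelcomeEmailsSent++; // side effect that must not happen twice
        return true;
    }
}
```

In a real system with several workers, the check and the update would need to happen atomically (for example, through a conditional update in the database), since two workers could otherwise both pass the check at the same moment.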

Optimize for Cost and Performance

Once you've built a resilient foundation, you can start fine-tuning for performance and cost. A few small tweaks in how you interact with the queue can have a massive impact on your throughput and your monthly bill, especially as you scale.

Message batching is a perfect example. Instead of pulling messages down one by one, your worker can grab up to 32 messages in a single go. This drastically cuts down on API calls, which directly lowers your transaction costs and speeds up the entire processing pipeline.
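A batched receive might look something like the sketch below. The connection string placeholder, queue name, five-minute visibility timeout, and five-second idle delay are all assumptions for illustration.

```csharp
using System;
using System.Threading.Tasks;
using Azure.Storage.Queues;
using Azure.Storage.Queues.Models;

var queueClient = new QueueClient("YOUR_STORAGE_ACCOUNT_CONNECTION_STRING", "image-processing-jobs");

// One request fetches up to 32 messages; all of them share the same visibility timeout,
// so it has to cover the time needed to work through the whole batch.
QueueMessage[] batch = (await queueClient.ReceiveMessagesAsync(
    maxMessages: 32,
    visibilityTimeout: TimeSpan.FromMinutes(5))).Value;

foreach (QueueMessage message in batch)
{
    Console.WriteLine($"Processing {message.MessageId}: {message.Body}");
    // ... do the actual work here ...
    await queueClient.DeleteMessageAsync(message.MessageId, message.PopReceipt);
}

if (batch.Length == 0)
{
    // Nothing to do: pause briefly instead of polling in a tight loop.
    await Task.Delay(TimeSpan.FromSeconds(5));
}
```

Note that each delete is still its own request; the saving comes from the receive side, where one call replaces up to 32.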

Another critical pattern is creating your own dead-letter queue. You will eventually encounter "poison messages"—messages that your worker can't process, no matter how many times it retries. Letting them sit in the main queue is a recipe for disaster. The standard practice is to have your worker logic move these stubborn messages to a separate queue (often named something like <queuename>-poison). This gets the problem message out of the way, allows you to inspect it later, and keeps the main queue flowing smoothly.

It's this kind of robust, thoughtful design that makes Azure Storage Queue a trusted choice for mission-critical workloads. In fact, it's a core part of a platform trusted by an estimated 85–95% of Fortune 500 companies. You can read more about Azure's role in the enterprise on Turbo360.com.

Common Questions About Azure Storage Queue

When you first start digging into Azure Storage Queue, a few questions almost always pop up. They usually circle around message reliability, how to deal with failures, and whether you can count on messages being processed in order. Getting these concepts straight is fundamental to building a solid, dependable system on top of this service.

Let's tackle one of the biggest concerns right away: message durability. What happens if a worker process grabs a message and then crashes? Is the message lost forever?

Thankfully, no. The magic here is a feature called the visibility timeout, which is part of a two-step deletion process. When a consumer reads a message, the queue doesn't delete it. Instead, it just hides it, making it invisible to other consumers for a set period. If the worker finishes its job successfully, it sends a separate command to permanently delete the message.

But if that worker crashes, the timeout eventually expires and the message simply reappears in the queue, ready for another worker to pick it up. This "peek-lock" pattern is the bedrock of reliability in Storage Queue, ensuring that temporary glitches don’t cause you to lose data.

What About Messages That Always Fail?

So, what if a message is fundamentally broken? It gets picked up, a worker crashes, it reappears, another worker tries, and the cycle repeats. This is what we call a "poison message," and if you're not careful, it can grind your whole system to a halt.

While Azure Storage Queue doesn't have a built-in "dead-letter queue" like its cousin, Azure Service Bus, it gives you everything you need to create your own. This is a standard and highly recommended best practice.

Here’s the game plan:

- Check the Dequeue Count: Every time a message is retrieved, the queue increments a DequeueCount property. Your worker should always check this number first.

- Define a Limit: Decide on a reasonable retry limit for your application. For many scenarios, 5 attempts is a good starting point.

- Move the Poison: If the DequeueCount goes past your limit, the worker's logic should stop trying to process it. Instead, it should copy the message to a separate queue (often named something like myqueue-poison) and then delete the original.

This strategy effectively quarantines the problematic message, letting the rest of your queue flow smoothly. Later, you can inspect the poison queue to debug the issue without having to take down your live system.
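The game plan above can be sketched roughly as follows. The queue names, the limit of 5, and the PoisonPolicy helper are illustrative assumptions rather than anything the SDK prescribes.

```csharp
using System;
using Azure.Storage.Queues;
using Azure.Storage.Queues.Models;

const string connectionString = "YOUR_STORAGE_ACCOUNT_CONNECTION_STRING";
var mainQueue = new QueueClient(connectionString, "image-processing-jobs");
var poisonQueue = new QueueClient(connectionString, "image-processing-jobs-poison");
await poisonQueue.CreateIfNotExistsAsync();

QueueMessage message = (await mainQueue.ReceiveMessageAsync()).Value;

if (message != null)
{
    if (PoisonPolicy.IsPoison(message.DequeueCount))
    {
        // Copy first, then delete: if we crash in between, the worst case is a
        // duplicate in the poison queue rather than a lost message.
        await poisonQueue.SendMessageAsync(message.MessageText);
        await mainQueue.DeleteMessageAsync(message.MessageId, message.PopReceipt);
        Console.WriteLine($"Quarantined message {message.MessageId}");
    }
    else
    {
        // ... process normally, then delete the message ...
    }
}

// Hypothetical helper, not part of the Azure SDK.
public static class PoisonPolicy
{
    public const int MaxDequeueCount = 5;

    // DequeueCount already includes the current delivery, so a message is
    // attempted on deliveries 1 through 5 and quarantined on the 6th.
    public static bool IsPoison(long dequeueCount) => dequeueCount > MaxDequeueCount;
}
```

Keeping the limit in one place makes it easy to tune later without hunting through the worker code.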

Can I Get Guaranteed Message Order?

This is another big one. People often assume a queue is strictly First-In, First-Out (FIFO). With Azure Storage Queue, is that a safe assumption?

The short answer is no.

Azure Storage Queue offers best-effort ordering, but it absolutely does not guarantee FIFO delivery. It's built for massive scale, with many different nodes handling requests. This means the exact order you put messages in isn't necessarily the exact order you'll get them out.

If your application requires strict, in-order processing—like handling steps in a financial transaction or a user signup wizard—then Azure Storage Queue isn't the right choice. For those ironclad ordering guarantees, you'll want to use Azure Service Bus Queues, which are designed specifically for that purpose.

For the vast majority of background jobs where the exact order doesn't matter, the incredible scalability and simplicity of Storage Queues make it a perfect fit.

