A solid SQL database backup strategy is more than just running a few scripts; it's a careful blend of business understanding and technical know-how. At its heart, it's about knowing your business needs—your RPO and RTO—and then picking the right tools for the job, like full, differential, and transaction log backups. Getting these fundamentals right from the start is what separates a reliable recovery plan from a recipe for disaster.

Building Your Bedrock Backup Strategy

Before you write a single line of T-SQL or touch the Azure portal, pause and think about the big picture. A truly resilient backup plan isn't built on commands; it’s built on a deep understanding of your business requirements. I've seen too many people jump straight to the technical side, only to find their backups can't deliver when a real crisis hits.

The whole process really boils down to answering two critical questions that will become the pillars of your entire data protection strategy.

Defining Your Recovery Objectives

Everything you do from this point on will flow from your Recovery Point Objective (RPO) and Recovery Time Objective (RTO). These aren't just abstract terms; they are concrete business metrics that directly impact how well you can weather a storm.

-

Recovery Point Objective (RPO): This is all about data loss. It asks, "What's the maximum amount of data we can afford to lose?" If your business sets an RPO of 15 minutes, your backups must be able to restore the database to a state no more than 15 minutes before the failure. A low RPO is more complex and costly, while a higher one is simpler but risks losing more data.

-

Recovery Time Objective (RTO): This is all about downtime. It asks, "How quickly do we need to be back up and running?" An RTO of one hour means the entire restore process—from start to finish—has to be completed within 60 minutes. Hitting a tight RTO requires fast hardware, well-tested scripts, and a team that knows exactly what to do.

Don't make the mistake of seeing RPO and RTO as purely technical decisions. They are business decisions, first and foremost. The business must define its tolerance for downtime and data loss; your job is to build the technical solution that meets those targets.

Choosing the Right SQL Backup Types

With your RPO and RTO clearly defined, you can now choose the right mix of backup types to achieve them. SQL Server gives you three main options, and each plays a specific role in a well-rounded strategy.

-

Full Backups

A full backup is the foundation of your recovery plan. It’s a complete copy of the entire database, including a portion of the transaction log. While they are absolutely essential, running them too often on a large, busy database can be a major drain on storage and I/O. Think of it as your reliable, complete baseline. -

Differential Backups

These are the smart, efficient backups. A differential backup only captures the data that has changed since the last full backup. They’re much smaller and faster to create, making them perfect for bridging the gap between full backups. A common and effective pattern is to take a full backup once a week and a differential every day. -

Transaction Log Backups

This is your secret weapon for hitting a low RPO. A log backup captures all the transaction log records generated since the last time a log backup was taken. By scheduling these frequently—say, every 10-15 minutes—you enable what's called a point-in-time recovery. This lets you restore a database to a specific moment, like just before a user accidentally wiped out a critical table.

Understanding SQL Server Recovery Models

The final piece of this strategic puzzle is the database recovery model. This setting dictates how transactions are logged, which in turn determines which backup and restore options are even available to you. Picking the wrong one can completely undermine your entire backup strategy.

There are three recovery models to choose from:

-

Full: This is the gold standard for production databases. It fully logs every transaction, which is a prerequisite for taking transaction log backups. The Full model gives you the most power and flexibility, including point-in-time restores.

-

Simple: In this model, the log space is automatically reclaimed, keeping the log file small. The major trade-off? You can't take transaction log backups. This means you can only restore to the time of your last full or differential backup, making it a poor choice for any system where you can't afford to lose data.

-

Bulk-Logged: This is a specialized, hybrid model. It acts like the Full model but minimally logs certain bulk operations (like rebuilding a large index) to boost performance. While it saves log space, it can complicate point-in-time recovery scenarios, so use it with caution.

For any plan designed to backup a SQL database that's critical to your business, the Full recovery model is almost always the right answer. It’s the only model that provides the granularity you need to meet demanding RPO and RTO targets.

Hands-On Database Backups with T-SQL Scripts

While portals and GUIs are great for quick tasks, nothing gives you the raw power and fine-grained control over your backups like good old T-SQL. When you get your hands dirty with scripting, you move beyond simple point-and-click operations and start building a genuinely resilient, customized SQL database backup process. It’s all about taking full control to make sure your backup routines are truly optimized for your environment.

The BACKUP DATABASE command is your entry point, but its real value comes from the powerful options that can make a world of difference in efficiency and reliability. Let's look at the practical scripts that I and other DBAs use to keep production systems safe.

Fine-Tuning Backups with Core Options

Just running a backup isn't enough; you have to make it efficient. Two of the most crucial clauses I use are WITH COMPRESSION and WITH CHECKSUM. Honestly, I consider these non-negotiable for almost any production backup.

-

WITH COMPRESSION: This is a game-changer. It can shrink your backup files by 50-70% or even more. That doesn't just save a ton of disk space—it also speeds up the entire backup process because there’s simply less data to write to disk. -

WITH CHECKSUM: Think of this as your first line of defense against data corruption. It tells SQL Server to verify every page as it's being written to the backup file. If it finds a bad page, the backup fails immediately, alerting you to a serious problem before you end up with a useless backup.

Putting these together, a solid full backup command looks clean and simple.

BACKUP DATABASE [MyProductionDB]

TO DISK = 'D:\Backups\MyProductionDB_FULL.bak'

WITH

COMPRESSION,

CHECKSUM,

STATS = 10;

I like to add STATS = 10 for a bit of user-friendliness. It gives you progress updates in 10% chunks, so you're not just staring at a blinking cursor, wondering if it's working.

Scripting Different Backup Types

A robust strategy always involves a mix of backup types. Here’s how you can script each one.

A differential backup, which captures all changes since the last full backup, just needs one tweak: the WITH DIFFERENTIAL clause.

BACKUP DATABASE [MyProductionDB]

TO DISK = 'D:\Backups\MyProductionDB_DIFF.bak'

WITH

DIFFERENTIAL,

COMPRESSION,

CHECKSUM;

For transaction log backups—the key to point-in-time recovery—the command is a bit different. Just remember, you can only run log backups if your database is in the Full or Bulk-Logged recovery model.

BACKUP LOG [MyProductionDB]

TO DISK = 'D:\Backups\MyProductionDB_LOG.trn'

WITH

COMPRESSION,

CHECKSUM;

A pro tip I swear by: always script your backups with dynamic file names. Include the database name and a timestamp. This stops you from accidentally overwriting old backups and makes finding the right file so much easier when the pressure is on during a restore.

Tackling Very Large Databases

What do you do when your database swells into a multi-terabyte beast? Backing up to a single, massive file becomes a huge bottleneck for both backups and restores. The answer is backup striping—splitting the backup across multiple files.

SQL Server is smart enough to write to all these files at the same time. If you can point each file to a different physical disk, you can see a dramatic boost in backup speed.

Here’s what that looks like, striping a full backup across four separate files and drives.

BACKUP DATABASE [VeryLargeDB]

TO

DISK = 'D:\Backups\VeryLargeDB_1.bak',

DISK = 'E:\Backups\VeryLargeDB_2.bak',

DISK = 'F:\Backups\VeryLargeDB_3.bak',

DISK = 'G:\Backups\VeryLargeDB_4.bak'

WITH

COMPRESSION,

CHECKSUM,

STATS = 5;

This approach makes the entire operation faster and much more manageable.

Embracing Modern Compression

The standard compression in SQL Server has served us well for years, but things are always improving. One of the most exciting recent developments is the Zstandard (ZSTD) compression algorithm. In tests on a 25.13 GB database, ZSTD hit a backup speed of 714.558 MB/sec. For comparison, the traditional algorithm clocked in at 295.764 MB/sec with similar compression levels. That’s a massive performance gain.

You can dive deeper into these benchmarks and see how to use the new algorithm by checking out this fantastic analysis of SQL Server 2025's new backup magic.

By going beyond the basic commands and using these real-world T-SQL techniques, you can build a SQL database backup plan that’s not just dependable, but incredibly efficient.

Managing Backups in Azure SQL Database

https://www.youtube.com/embed/dzkl6ZCQO9s

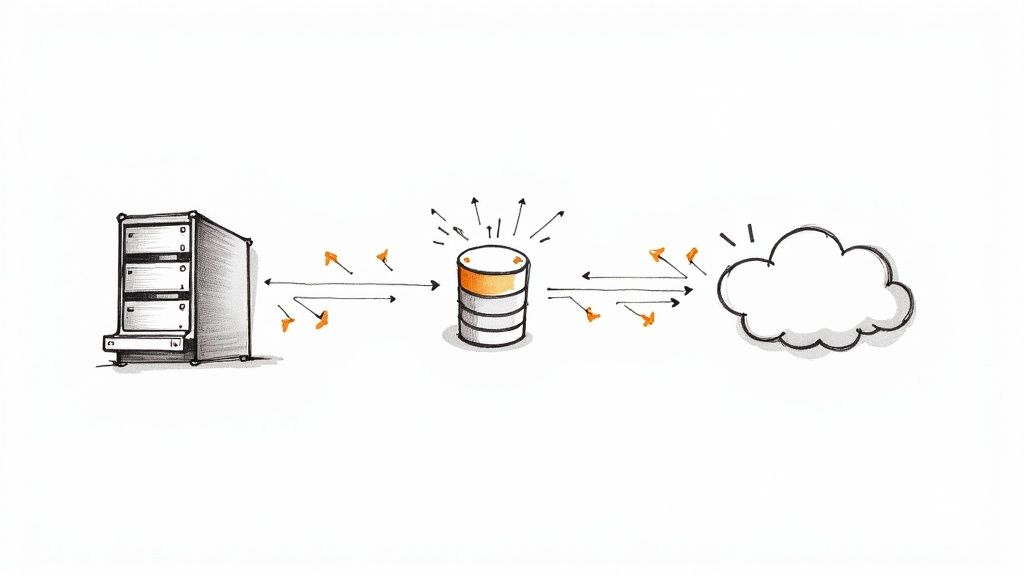

When you make the leap from a traditional on-premises server to an Azure SQL Database, your whole operational playbook changes. This is especially true for backups. The days of manually scripting and scheduling jobs are mostly over. In Azure, you hand over that daily grind, but you're still in the driver's seat when it comes to understanding and managing your data's safety.

Azure SQL Database completely redefines backup management by giving you a powerful, automated service right out of the box. You'll likely never need to write a BACKUP DATABASE command for routine protection again. Behind the scenes, Azure is constantly running a mix of full, differential, and transaction log backups for you.

This automation is the magic that enables one of Azure's most powerful features: Point-in-Time Restore (PITR). Depending on your service tier, you can rewind your database to any specific second within a retention window, which typically falls between 7 and 35 days. It’s your go-to solution for those heart-stopping moments, like a developer dropping a table or running a DELETE without a WHERE clause.

Configuring Long-Term Retention for Compliance

The built-in PITR is a lifesaver for operational recovery, but what about the long haul? Many industries have strict rules that require you to keep backups for months or even years. For that, you need Long-Term Retention (LTR).

LTR lets you create policies to automatically copy specific full backups into separate Azure Blob Storage, where they can be kept for up to 10 years. You can set up a simple policy that ensures you stay compliant, then forget about it.

A common LTR policy I've seen in the field looks something like this:

- Keep the weekly backup from the last 8 weeks.

- Keep the first weekly backup of every month for 12 months.

- Keep the first weekly backup of the year for 7 years.

Setting this up is a breeze. From the Azure Portal, just go to your SQL server, find "Backups," and click on the "Retention policies" tab. From there, you can pick the databases you want to protect and configure the weekly, monthly, and yearly schedules. It’s a few clicks for a ton of long-term security.

Trusting the automation is key, but so is knowing how to verify it. I make it a habit to regularly check the "Available backups" for a database in the portal. This screen is your confidence dashboard—it shows you the earliest PITR point, the latest restore point, and all your available LTR backups.

The Ultimate Safety Net: Geo-Redundant Backups

What’s the plan if an entire Azure region goes down? It’s the worst-case scenario, but it’s one that Azure is built to handle. By default, your database backups are stored in Geo-Redundant Storage (GRS). This doesn't just mean your backups are copied within your primary region; they are also being asynchronously replicated to a paired Azure region hundreds of miles away.

This geo-replication is your ultimate disaster recovery parachute. If a regional catastrophe occurs, you can perform a geo-restore to bring your database back online in the paired region using the last available replicated backup. The best part? It's enabled by default, giving you a level of resilience that would be incredibly complex and costly to build on your own. This type of built-in resilience is a core principle in Azure's platform services. To see how it applies to web hosting, you can read our detailed guide on what Azure App Service is and its capabilities.

By getting a handle on these layers of protection—from automated PITR to configurable LTR and built-in GRS—you can move from being a script-runner to a true strategist for your SQL database backup plan in the cloud. You get to ensure your data is safe, compliant, and always recoverable.

Automating Your Backups with PowerShell and the Azure CLI

If you're managing more than a handful of databases, clicking through a portal for backups just isn't sustainable. Manual work doesn't scale well, it’s a breeding ground for human error, and frankly, it eats up time you don’t have. This is where command-line tools like PowerShell and the Azure CLI stop being nice-to-haves and become absolutely essential for modern data management.

By scripting your backups, you can shift from being a reactive admin putting out fires to proactively managing your entire data environment. Let's dig into some practical scripts you can adapt right now to bring some much-needed efficiency and consistency to your operations, whether your servers are in your own data center or in the cloud.

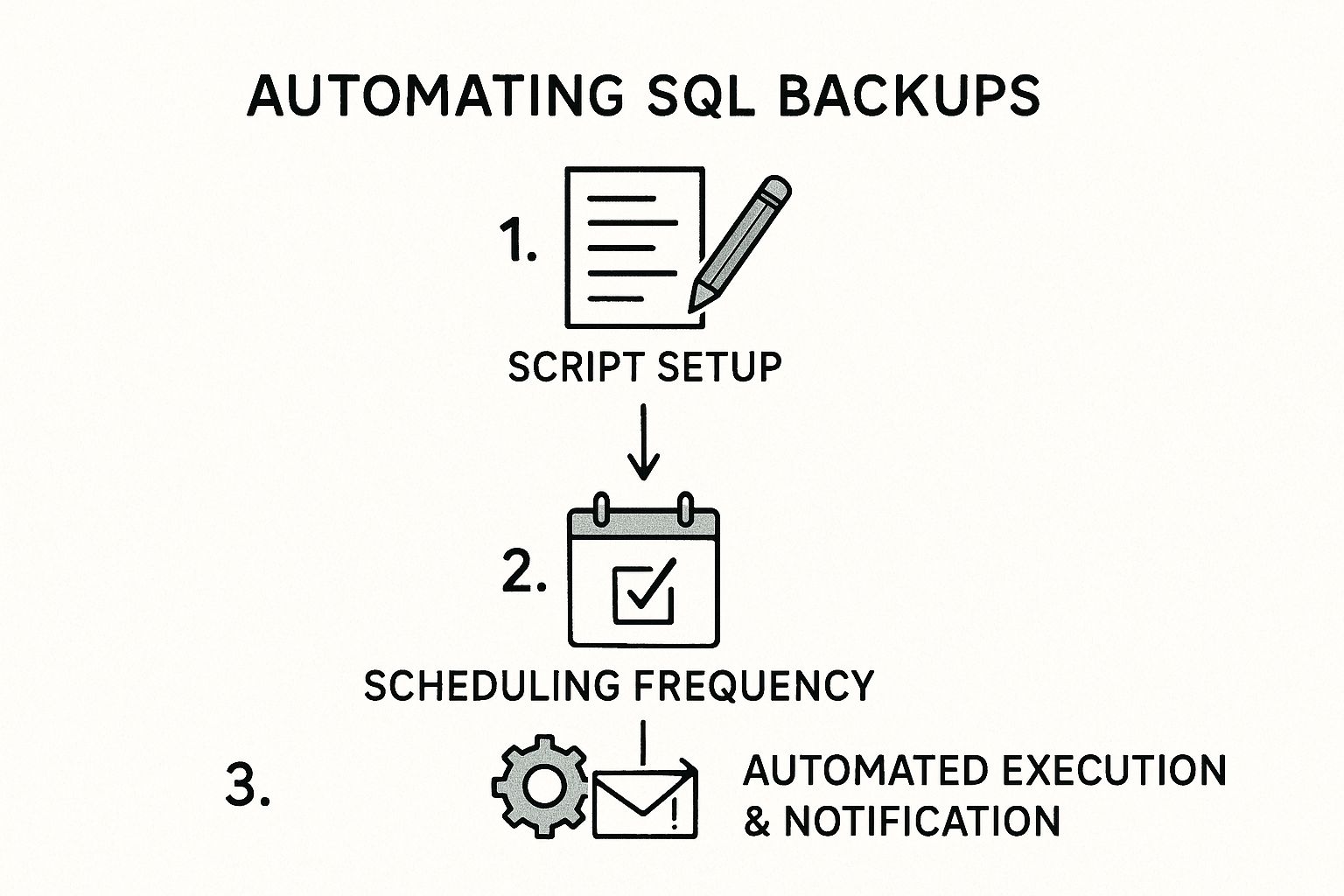

This diagram shows how you can turn a tedious manual task into a reliable, hands-off system.

It’s all about moving from one-off script development to a fully scheduled and monitored workflow.

PowerShell for On-Premises SQL Server

When you're working with on-premises SQL Server instances, PowerShell is your best friend. The community-driven dbatools module is a powerhouse, but you can get a ton done with the native SqlServer module that comes with SQL Server Management Studio. The main command you'll get to know is Backup-SqlDatabase.

A basic full backup command is simple enough:

Backup-SqlDatabase -ServerInstance "YourServerName" -Database "YourDatabase" -BackupFile "D:\Backups\YourDatabase_Full.bak"

But scripting is where the magic really happens. Let's say you need to back up all the user databases on a server. Instead of a mind-numbing, one-by-one process, you can string commands together.

Get-SqlDatabase -ServerInstance "YourServerName" | Where-Object { $.Name -ne "master" -and $.Name -ne "model" -and $.Name -ne "msdb" -and $.Name -ne "tempdb" } | Backup-SqlDatabase

This slick one-liner grabs all user databases and feeds them straight into the backup command, giving you a consistent backup sql database operation across the entire instance. Just drop this script into Windows Task Scheduler, set it to run daily, and you've automated a critical task.

I once had to standardize backup procedures across two dozen servers for a new client. Scripting this with PowerShell saved us what would have been days of tedious clicking. More importantly, it ensured every single server used the exact same compression and verification settings, which eliminated the configuration drift we were fighting.

Azure CLI for Cloud-Scale Management

When your data lives in Azure, the Azure CLI offers a lightweight, cross-platform tool for managing everything from the command line. It's fantastic for weaving backup management into your CI/CD pipelines or for making changes across many resources at once. The command to know here is az sql db backup.

For example, kicking off a long-term retention (LTR) backup for an Azure SQL Database is a single, clean command.

az sql db ltr-backup create

–resource-group YourResourceGroup

–server YourServerName

–name YourDatabaseName

That’s handy, but the real power comes when you need to apply a setting at scale. Imagine a new compliance rule requires you to update the LTR policy for every database on a server. Doing that in the portal is a nightmare; a script makes it trivial.

Here’s how you could set a policy to keep weekly backups for 10 weeks, monthly backups for 12 months, and yearly backups for 5 years:

az sql db ltr-policy set

–resource-group YourResourceGroup

–server YourServerName

–name YourDatabaseName

–weekly-retention "P10W"

–monthly-retention "P12M"

–yearly-retention "P5Y"

–week-of-year 1

Wrap this in a simple loop that reads a list of your databases, and you can update hundreds of policies in minutes. That kind of automation is what keeps you sane while ensuring compliance in a large cloud environment. If you're just getting started with Azure's command-line tools, our guide on the Azure PowerShell module is a great place to learn the fundamentals.

Choosing Your SQL Backup Method

Deciding which tool to use often comes down to where your database lives and how much control you need. This table breaks down the most common methods to help you pick the right one for the job.

| Method | Best For | Control Level | Environment | Automation |

|---|---|---|---|---|

| Azure Portal UI | Beginners, one-off tasks, visual checks | Low | Azure | Manual |

| SSMS UI | On-prem admins, visual workflow | Medium | On-Premises | Manual |

| PowerShell | On-prem automation, granular control | High | On-Premises / Azure | Excellent |

| Azure CLI | Cloud automation, DevOps pipelines | High | Azure | Excellent |

| T-SQL Scripts | Deep customization, legacy systems | Very High | On-Premises / Azure | High (via Agents) |

Ultimately, PowerShell and the Azure CLI are built for scale. While the UI is great for a quick look or a single task, automation is the only way to reliably manage a growing data estate without losing your mind.

The Unskippable Step: Validating and Testing Your Backups

Let's be blunt: an untested backup is nothing more than a hope. It’s not a recovery plan. It's the digital equivalent of Schrödinger's cat—you have no idea if your data is alive or dead inside that file until you actually look. This validation step is easily the most important part of any data protection strategy, and sadly, it's also the most frequently skipped.

It's tempting to see that "backup completed successfully" message and feel a sense of security. But all that message confirms is that a file was created. It tells you nothing about whether that file is actually restorable, free of corruption, or even contains the data you think it does. Moving from hoping your SQL database backup will work to knowing it will is what separates the pros from the amateurs.

The First Pass: RESTORE VERIFYONLY

For a quick spot-check, you can use the RESTORE VERIFYONLY command. This T-SQL command is a basic checkup. It looks at the backup file's header to confirm it's readable and appears to be a legitimate SQL Server backup. The best part? It’s lightning-fast and uses minimal server resources.

RESTORE VERIFYONLY

FROM DISK = 'D:\Backups\MyProductionDB_FULL.bak';

While it’s a good first step, relying only on VERIFYONLY is a recipe for disaster. It doesn't inspect the internal structure of your data pages or guarantee the data within is uncorrupted. Think of it as checking that a book has a cover and the right number of pages, but never actually reading the words to see if they make sense.

An untested backup is a liability waiting to happen. True confidence doesn't come from a "backup successful" message; it comes from regularly proving you can restore your data, intact and usable, when it matters most.

The Real Test: Full Restore Drills

The undisputed gold standard for backup validation is performing regular, full restore drills. This means taking your production backups and restoring them onto a separate, non-production server. This simple exercise validates two critical things at once: that your backup file is physically sound and that the database inside is logically intact.

Your test environment doesn't need to be a mirror image of your production server's power, but it absolutely must have enough disk space to hold the restored database. Smart organizations automate this entire process, scripting a job that grabs the latest backup, restores it to a test instance, and then runs a series of checks.

Verifying Data Integrity with DBCC CHECKDB

Once the database is restored, you're not done yet. The final, non-negotiable step is to run DBCC CHECKDB against that freshly restored copy. This command is the ultimate health check for your database, performing an exhaustive analysis of all objects, pages, and structures to hunt down any signs of corruption.

DBCC CHECKDB ('MyRestoredDB') WITH NO_INFOMSGS, ALL_ERRORMSGS;

Running this command is the only way to be certain that the data you've backed up is not just present, but also consistent and usable. Finding corruption here, on a test server, is a routine administrative task. Finding it during a real production outage is a career-defining crisis.

Managing Performance on Massive Databases

As databases swell in size—with industry data showing growth around 30% annually—the backup and restore validation process can become a real resource hog. Using native backup compression has become a standard practice, often shrinking space requirements by up to 70% and helping you meet your Recovery Time Objectives (RTO). For more on this, check out how you can improve backup efficiency in modern SQL Server versions.

When it comes to validation, scheduling is everything. Run your restore drills during off-peak hours, like overnights or weekends, to avoid impacting other development or test environments. This systematic approach ensures your testing doesn't become a bottleneck while building genuine, battle-tested confidence in your recovery plan. This kind of structured repetition aligns with proven learning principles, a concept you can explore further in our guide on how to use flashcards for studying.

Answering Your Top SQL Database Backup Questions

When you're dealing with SQL database backups, a few key questions always seem to pop up. Let's tackle them head-on with some practical, real-world answers that I've picked up over the years. This is the stuff that helps you move from theory to a solid, reliable backup strategy.

Can I Back Up a Database While It's Being Used?

You absolutely can, and in fact, you have to. SQL Server was built from the ground up to handle backups on live databases with active connections. There's no need to kick users out or take the system offline.

It works by using a kind of snapshot. The moment you start the backup, SQL Server locks in the state of the data, ensuring the backup file is transactionally consistent. Any transactions that happen after the backup starts won't mess it up. Yes, there's a slight performance hit, but on modern systems, especially when using the COMPRESSION option, it's usually negligible.

How Often Should I Run My Backups?

This is the million-dollar question, and the honest answer is, "it depends." But what it really depends on is your Recovery Point Objective (RPO)—how much data can the business stand to lose?

Once you have that answer, you can build a schedule. A battle-tested strategy for many businesses looks something like this:

- Weekly Full Backups: Kick this off on a quiet day, like Sunday at 2 AM. This is your baseline, your complete copy.

- Daily Differential Backups: Run these every night, say at 10 PM. They'll grab all the changes made since that last full backup, keeping your restore times faster than just using logs.

- Frequent Transaction Log Backups: During business hours, this is your lifeline. Backing up the transaction log every 15 minutes is a common and effective target.

With this setup, the absolute worst-case scenario means you lose no more than 15 minutes of work.

Don't forget: Your backup schedule is a direct reflection of your business's tolerance for data loss. If management says losing an hour of transactions is unacceptable, then a simple daily backup plan just won't cut it.

What's the Real Difference Between the Full and Simple Recovery Models?

The recovery model you choose for a database is a critical setting. It dictates how transactions are logged, which directly impacts the types of backups you can even perform. Getting this wrong can completely derail your recovery plan.

-

Simple Recovery Model: Think of this as "easy mode." It automatically clears out the transaction log to keep it from growing. The massive trade-off? You cannot perform transaction log backups. This means you can only restore your database to the point of your last full or differential backup. It's really only meant for dev/test environments where losing data isn't a big deal.

-

Full Recovery Model: This is the non-negotiable standard for any production database. It meticulously logs every transaction and holds onto it until you specifically back up the transaction log. This is the only model that enables point-in-time recovery and lets you meet a tight RPO.

Do I Really Need to Back Up the System Databases?

Yes. Emphatically, yes. While your user databases hold the application data, system databases like master and msdb are the brain and central nervous system of your SQL Server instance.

- The

masterdatabase contains all your server-level configurations, logins, and pointers to all your other databases. If you losemaster, you're essentially rebuilding your server's identity from scratch. - The

msdbdatabase is home to the SQL Server Agent. It stores all your jobs, schedules, alerts, and your entire backup history. Losingmsdbmeans all of your carefully crafted automation is gone.

Treat master and msdb with the same respect as your user databases. Back them up regularly and always after you make a significant server-level change.

Mastering Azure concepts like backup and recovery is a critical skill for passing the AZ-204 exam. AZ-204 Fast provides all the tools you need—from interactive flashcards to dynamic practice exams—to build deep knowledge and pass with confidence. Start your focused study journey at https://az204fast.com.

Leave a Reply